ijustpostwhenimhi

Here are some power draw numbers for various states of use. @StackMySwitchUp

For the SAS configuration I'm using three 2TB HGST SAS HDDs, and the cage has a slim 120mm fan.

All numbers include the Pi and a USB 3.0 hub.

Prev posts:

SAS Controller: https://imgur.com/gallery/Jrtu3KE

PCIe 3.0 Switch: https://imgur.com/gallery/EkGvOsj

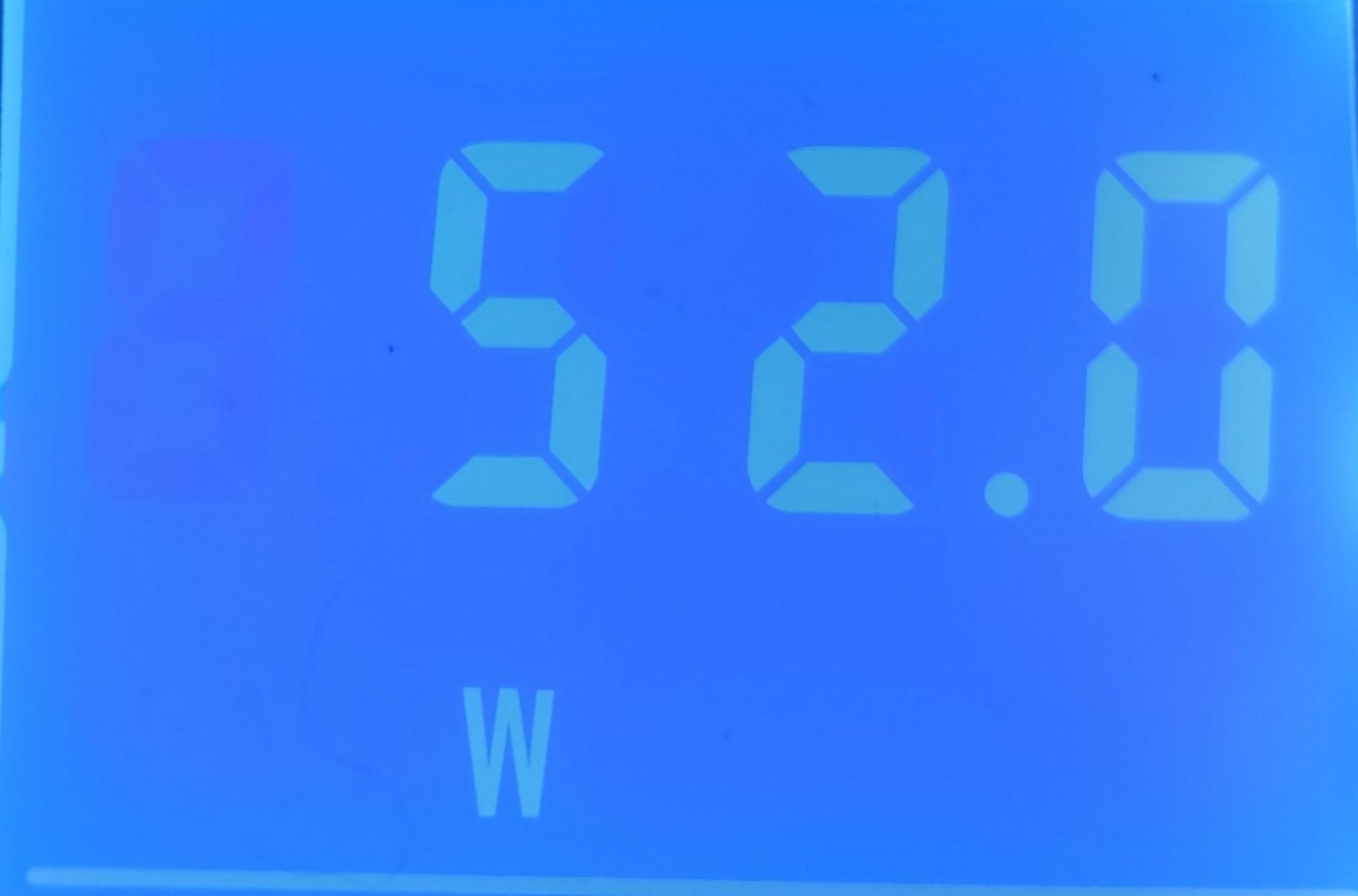

Pi w/ SAS Ctrl, disks asleep

Pi w/ SAS Ctrl, 3 HDD ZFS pool, idle

Pi w/ SAS Ctrl, 3 HDD ZFS pool, large write operation

For the PCIe 3.0 Switch, I have it populated with a 1TB and a 128GB NVMe SSD

Pi w/ PCIe 3.0 switch and 2 NVMes, idle

JackHL01

UnitConversionBot

120mm ≈ 4 6/8 inches

wyrmbear

120mm was my stripper name in college....

StackMySwitchUp

Damn, that's a lot more than I expected! Thanks for testing and the tag!!

StackMySwitchUp

You sure the pool was idle and not doing maintenance?

StackMySwitchUp

For reference, I'm running a Proxmox box on an mATX 1151 board with an i3 6100 and 5 enterprise SATA 600 disks in a TrueNAS VM. That whole setup does 30W if not less, so I'm a little shocked.

ijustpostwhenimhi

The target is 12-bay with 10G connectivity. I was looking at AIO solutions as a power reference. Something like https://www.synology.com/en-global/products/DS3622xs+ (94W access, 54W sleep). Hoping to get somewhere close to that.

ijustpostwhenimhi

I have a 12-bay rack enclosure with a SAS backplane on the way from China. At $130 shipped I'm still far from AIO prices at least. The enclosure supports daisy chaining too, so that's neat if I ever need more bays.

ijustpostwhenimhi

That's impressive for an x86. My x86 server idles at 120W with max power saving, though it's a full-size board (Supermicro X9SRL-F). As for the pool, the 52W idle is the same with ZFS unloaded. I only get the 35W when I force a spin-down. Something to consider is that the 12V source is an older 1000W PSU, so it may not be very efficient at loads this low, especially since the disks alone seem to draw 17W. The final build will have something more appropriate.
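For anyone following the arithmetic, here is a minimal sketch of where the 17W figure comes from: it is just the gap between the spinning idle (52W) and the forced spin-down (35W) readings. The function name is hypothetical, and the per-disk split assumes the whole gap is attributable evenly to the three drives, which ignores PSU losses at low load.

```python
def spinning_overhead(idle_spinning_w, idle_spun_down_w, disk_count):
    """Return (total, per-disk) extra draw when the disks are spinning.

    Assumes the only change between the two readings is the spindle state,
    so the difference is attributed entirely and evenly to the disks.
    """
    total_w = idle_spinning_w - idle_spun_down_w
    return total_w, total_w / disk_count


# Wall readings quoted in the thread: 52W idle, 35W with a forced spin-down.
total_w, per_disk_w = spinning_overhead(52.0, 35.0, 3)
print(f"disks spinning add about {total_w:.0f}W total, {per_disk_w:.1f}W each")
```

Since the readings are taken upstream of an oversized PSU, the real per-disk figure is probably a bit lower than this rough split suggests.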

StackMySwitchUp

A couple of side notes: the disks are all SSDs, and the PSU is a 19V laptop brick with a DC-DC step-down into a pico PSU, in a Supermicro SuperChassis with a powered backplane and no extra NIC yet. The CPU governor is set to powersave, but it doesn't peak very high anyway. I haven't measured the server standalone, so it's a guesstimate: the total draw of the whole stack (a laptop motherboard, a MikroTik 3011, an enterprise PoE switch, and this server) is 45-55W, which I thought was pretty decent.

ijustpostwhenimhi

Ah yeah, I'd love to have SSDs, but they're out of the budget, apart from maybe some cache. Still, I was unaware x86 could get that low. I saw mini-PCs pulling 30W+ alone, so I figured the Pi's max of 15W (in testing) already put me ahead of an x86 build. Still gonna push forward though. Going by the label and some Ohm's law, these HDDs are each rated at 13.6W max, and the final 6TB drives are 14.1W.
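As a rough sanity check on those label ratings, here is a small sketch that scales them to a drive count. The helper name is hypothetical, and the 12-drive case assumes all bays of the planned enclosure are filled with the 6TB drives. These are worst-case label figures, not measurements, so real idle and access draw should land well below them.

```python
def rated_max_total(per_drive_max_w, drive_count):
    """Upper bound on drive power: label max rating times number of drives."""
    return per_drive_max_w * drive_count


# Current test rig: three 2TB drives rated 13.6W max each.
current_max_w = rated_max_total(13.6, 3)

# Planned build (assumption: all 12 bays populated with 14.1W-rated 6TB drives).
target_max_w = rated_max_total(14.1, 12)

print(f"current rig, label max: {current_max_w:.1f}W")
print(f"12-bay build, label max: {target_max_w:.1f}W")
```

The label maximum mostly matters for sizing the 12V supply (spin-up is the heavy moment); it is not a prediction of what the wall meter will show at idle.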

StackMySwitchUp

With 6TB drives you might want to consider the zpool maintenance load on the Pi; it can be quite heavy on the CPU. Slower drives actually help to spread out the load because they give the CPU more headroom. Cache and transaction logging add to that pressure. Not sure about the limited bandwidth of the PCIe bus you're trying. Just saying before you go all out on hardware. I can recommend the Ryzen 3100 if you need an alternative; it has a TDP of 35W and runs at like 10W idle afaik.